Communication is the foundation of a good relationship! We all know that. The same rule applies to our web services: the way they communicate with each other is the cornerstone of our architecture. That’s why REST over HTTP has long been our main method of communication. We have quite a lot of experience with REST APIs, yet the same questions keep coming up: How do we reduce the dependency between the client and the server? How do we keep developing the API on the server without breaking the client? How do we make the client and server share as little information as possible?

Attempt Zero: Don’t worry about it and hard code everything

Imagine we have a server that provides this API:

```
GET /user
```

which returns a list of all active users. And now let’s imagine that we have a client, such as a frontend, that needs the list of users. How should the frontend determine that the list of all users is available at https://example.com/api/user and not elsewhere? The easiest way is to simply hard code it into the frontend and not think about it anymore. What happens if we then find out that we named the resource wrongly and that it should not be /user, but /users? We will change the routing on the server to

```
GET /users
```

and redirect /user to /users. Then we should modify the FE code so that it doesn’t ask for the old route anymore. Couldn’t we arrange things so that this step of adjusting the FE is not necessary?

Attempt One: Shared library

Eight years ago, we tried to solve this problem with a shared library. All our BE services ran on NodeJS and the FE was of course in JavaScript, so we decided to create an NPM module containing the definitions of all the actions the server can handle. Whoever wants to work with the API simply installs the library, which contains everything the client needs to use the server.

```javascript
class GetUsersQuery {
  constructor() {
    this.routePattern = '/users';
    this.method = 'GET';
  }
}
```

To illustrate, we could also write a data validation schema for POST/PUT actions:

```javascript
class SetCampaignNameCommand {
  constructor() {
    this.routePattern = '/campaigns/{id}/name';
    this.method = 'PUT';
    // Both client and server validate the data against this definition.
    this.validationScheme = {
      properties: {
        id: {
          type: 'string',
          required: true
        },
        name: {
          type: 'string',
          required: true
        }
      }
    };
  }
}
```

Benefits:

- It was actually super simple. Both FE and BE applications installed a library that contained the required definitions and thanks to them they knew where to find the required actions.

- If we modified an action, we just updated the library and gradually released applications that used the API.

- Thanks to these definitions, we were later able to generate, for example, Apiary-style documentation.

- The client (in our case mainly the FE) could validate the data even before sending it to the server, and we were sure that the server would validate the data according to the same schema.
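A minimal sketch of how both sides might have consumed such a definition. The helpers `validate` and `buildRequest` are hypothetical names rather than the original library's API, and the definition is written as a plain object to keep the sketch short:

```javascript
// Shared definition, as it might be exported from the common NPM module.
const setCampaignNameCommand = {
  routePattern: '/campaigns/{id}/name',
  method: 'PUT',
  validationScheme: {
    properties: {
      id: { type: 'string', required: true },
      name: { type: 'string', required: true },
    },
  },
};

// Hypothetical validator: checks presence and primitive type only.
function validate(definition, data) {
  const errors = [];
  for (const [key, rule] of Object.entries(definition.validationScheme.properties)) {
    const value = data[key];
    if (value === undefined) {
      if (rule.required) errors.push(`${key} is required`);
    } else if (typeof value !== rule.type) {
      errors.push(`${key} must be a ${rule.type}`);
    }
  }
  return errors;
}

// Hypothetical client helper: fills {placeholders} in the route pattern
// and sends the remaining fields as the request body.
function buildRequest(definition, data) {
  const errors = validate(definition, data);
  if (errors.length > 0) throw new Error(errors.join('; '));
  const body = { ...data };
  const path = definition.routePattern.replace(/\{(\w+)\}/g, (_, key) => {
    delete body[key];
    return encodeURIComponent(data[key]);
  });
  return { method: definition.method, path, body };
}

console.log(buildRequest(setCampaignNameCommand, { id: '42', name: 'Spring sale' }));
```

The server can call `validate` with the same definition on the incoming data, which is what guarantees that both sides apply identical rules.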

The solution also had its issues:

- Releasing all applications with every change to an action descriptor is not exactly any developer’s dream. In addition, the release is not atomic: from time to time the server with the new definitions went out before the FE did.

- It was not really usable by clients outside our company, to whom we did not want to provide libraries. Nor was it usable if we wanted to use a language other than JavaScript, such as Java.

- Sharing code between FE and BE eight years ago was actually a challenge and not yet common. So now and then someone modified the library and added a piece of code that ran on the BE in NodeJS but not on the FE, and was later terribly surprised that it didn’t work there.

But couldn’t we do even better? In a way that doesn’t require releasing the client every time we update the API on the server?

Intermezzo: What is REST?

Let’s stop here for a moment and talk about what REST is. A lot of people think that REST means POSTing a new user to the collection /users (plural!) and GETting it back from the address /users/123. But there are many more ideas behind REST. The basis is Roy Fielding’s dissertation, which describes REST. What interests us most here is Hypermedia as the Engine of Application State (HATEOAS). What does it mean?

When you as a user come to http://seznam.cz, you will see several links in the browser. Clicking one takes you to the next page, such as a car review, where you fill out and submit a comment like “take the Ford there, but the train back”. HATEOAS is the same thing, but for APIs.

The purpose of HATEOAS in a REST API is that the API itself guides you to what you can do with it. I will borrow an example from the previously referenced article. Imagine you want to control a toaster via an API. You send the request

```
GET /toaster HTTP/1.1
```

to which you will receive the answer

```
HTTP/1.1 200 OK

{
  "id": "/toaster",
  "state": "off",
  "operations": [{
    "rel": "on",
    "method": "PUT",
    "href": "/toaster",
    "expects": { "state": "on" }
  }]
}
```

The toaster tells you that it is currently off ("state": "off"). The interesting part is the operations field, where the API tells us that if we want to turn the toaster on, we have to send a PUT request to the address /toaster with the data { "state": "on" }. The API guides us just like the hypertext links on http://seznam.cz do. If you teach the client to understand this format, you have won. The server API dictates to the client what can be done with the resource and how. Imagine the possibilities: for each resource you can show the client the twenty operations that can be done with it. Are some operations only available to clients with higher privileges? OK, clients with lower privileges will only see fifteen operations, etc.
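As a rough sketch of what “teaching the client to understand this format” could look like, the function below picks an operation by its `rel` and turns it into a request description. The name `requestFor` and the shape of its return value are assumptions made for illustration:

```javascript
// Response body from GET /toaster, copied from the example above.
const toaster = {
  id: '/toaster',
  state: 'off',
  operations: [
    { rel: 'on', method: 'PUT', href: '/toaster', expects: { state: 'on' } },
  ],
};

// Hypothetical helper: turn the operation named `rel` into a request
// description, or return null when the server does not offer it.
function requestFor(resource, rel) {
  const operation = (resource.operations || []).find((op) => op.rel === rel);
  if (!operation) return null;
  return { method: operation.method, url: operation.href, body: operation.expects };
}

console.log(requestFor(toaster, 'on'));
console.log(requestFor(toaster, 'off')); // null: not offered in this state
```

The client only hard codes the name of the operation it wants. The method, address and payload all come from the server, and an operation the server does not list simply cannot be invoked.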

One does not even have to invent a custom format from scratch to be able to return data about operations. Standards such as JSON-LD, Hydra, Linked Data and many others already exist.

Attempt Two: how we wanted to make Roy Fielding proud

Full of enthusiasm, we tried to implement REST properly.

Our idea was that there would be a single “entry point” for our APIs at some prominent address such as example.com/api. There the client would find out everything that can be done with our API, so we would return something along the lines of “if you want to manage campaigns, GET /api/campaigns; if you want to manage users, GET /api/users” and so on. The client would then GET /api/users and receive the list of all users, each user carrying information like “to manage this user, GET /api/users/123”. There the client would learn that “the name of user 123 is changed by a PUT request to /api/users/123/name”, and only then would it finally send the desired PUT request to change the name.

We expected the client to do this every time it wanted to do something with our data. Do you want to change another user’s name? OK, start again nicely from the beginning: GET /api → GET /api/users → GET /api/users/124 → PUT /api/users/124/name. The resulting flood of requests was to be tamed by HTTP caching.
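The walk from the entry point to the final request might be sketched as follows. Everything here is an assumption made for illustration: the `links` format, the relation names and the in-memory `fakeApi` that stands in for real HTTP calls.

```javascript
// In-memory stand-in for the server; the "links" format is invented for this sketch.
const fakeApi = {
  'GET /api': { links: { users: { method: 'GET', href: '/api/users' } } },
  'GET /api/users': { links: { 'user-124': { method: 'GET', href: '/api/users/124' } } },
  'GET /api/users/124': { links: { 'change-name': { method: 'PUT', href: '/api/users/124/name' } } },
};

// Stand-in transport; a real client would issue HTTP requests here.
async function send(method, href) {
  const response = fakeApi[`${method} ${href}`];
  if (!response) throw new Error(`No such resource: ${method} ${href}`);
  return response;
}

// Start at the entry point and follow the named links one by one.
// Returns the last link without calling it, plus the requests made on the way.
async function discover(entryHref, relations) {
  const visited = [];
  let link = { method: 'GET', href: entryHref };
  for (const rel of relations) {
    visited.push(`${link.method} ${link.href}`);
    const resource = await send(link.method, link.href);
    link = resource.links[rel];
    if (!link) throw new Error(`Link "${rel}" is not offered`);
  }
  return { link, visited };
}

discover('/api', ['users', 'user-124', 'change-name']).then(({ link, visited }) => {
  console.log(visited.join(' → '));
  console.log(`${link.method} ${link.href}`); // PUT /api/users/124/name
});
```

Three GET requests are needed before the client even knows where to send the PUT, which is why so much depended on caching.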

At one point, we even flirted with the idea of not using readable addresses like /api/users/123 at all, but instead generating random, changing addresses with each request, so that “user 123” would live at something like /api/DF073897-E2C8-4881-B2A5-3EA40EFEA568, to force clients to always go through all the steps from the beginning. It was supposed to give us the ability to evolve the API without having to worry about breaking a client.

The “click-through” API was supposed to be another advantage. The idea was that when you open /api in the browser, it shows you the API as a normal HTML page with links to other routes. So you could click and see links to /api/users, etc. An API that also works as documentation! Do you want to know what the API can do? Open it in the browser! There you will find an up-to-date API and up-to-date documentation. What more could you want?

Enough of the ideas, let’s go programming. We made ourselves a coffee, started company laptops, sat down at the keyboards, and after five minutes we abandoned the idea as being overly complicated.

Attempt Three: we tried, but…

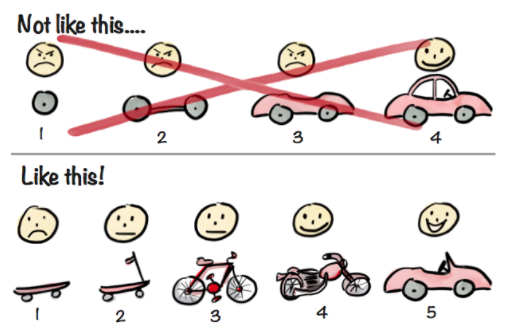

Don’t worry, we haven’t gone back to sharing libraries. There was a negotiation that resulted in the “full Fielding REST API” still being something we’d like. But there’s no time for that right now, so we’ll try to implement something that will at least get us closer to our dream. Something that will get us at least halfway there. Roughly along the lines of this picture…

…we just decided to implement… let’s say a bike. And sometime down the road, we’ll try a motorcycle or even a car. After a month of work, we had what we called an “Entry point”. We created a system of named actions, for example the “ListUsers” action represents “a query that returns a list of users”. The whole entry point then worked like this:

- At the main address of type /api, the server returned the information “for the ListUsers action data, GET /api/actions/ListUsers” to the client.

- At the /api/actions/ListUsers address we then returned the action information, in approximately the following form:

```json
{
  "endpoint": {
    "method": "GET",
    "route": "/users",
    "responseType": "json"
  },
  "actionDataDescriptor": {
    "queryData": {
      "limit": {
        "type": "Number",
        "optional": true
      }
    }
  }
}
```

If the client wanted to get a list of ten users, it had to send three HTTP requests: GET /api → GET /api/actions/ListUsers → GET /api/users?limit=10.

Great, we’re done! Now let’s look at how our bike rides.

What are its advantages over a scooter?

- We don’t have to release new versions of clients if we change the action descriptor. We are able to fix typos like “Jesus, I released /api/usrs/123, but it should have been /api/users/123” by only releasing the new server version. So we can change the API without having to modify the clients!

- It’s not dependent on the technology used! The NPM library could only be installed in JavaScript projects, whereas we were able to use the entry point protocol in Java projects as well.

- No shared code between FE and BE. Everything is data driven.

- For the client, the usage is quite simple. It only needs to know the URL of the entry point, the name of the action and the data. In principle, the usage looks like this:

```javascript
const entryPoint = new EntryPoint("https://example.com/api");
const apiAction = new ApiAction("ListUsers", {limit: 10});
entryPoint.execute(apiAction);
```

- No big deal. Even the fact that limit belongs in the query string is derived from the descriptor and does not need to be stated in the code, which is very nice!
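To make the three-request flow concrete, here is a rough sketch of how such a client could work internally. It is not the original implementation: the shape of the GET /api response (an `actions` map), the injected `transport` function and the choice to append the route to the entry point URL are all assumptions, and an in-memory `fakeServer` replaces real HTTP.

```javascript
// In-memory stand-in for the server. The shape of the GET /api response
// ("actions" map) is an assumption; the descriptor is the one shown earlier.
const fakeServer = {
  'https://example.com/api': { actions: { ListUsers: '/api/actions/ListUsers' } },
  'https://example.com/api/actions/ListUsers': {
    endpoint: { method: 'GET', route: '/users', responseType: 'json' },
    actionDataDescriptor: { queryData: { limit: { type: 'Number', optional: true } } },
  },
  'https://example.com/api/users?limit=10': [{ id: 123 }, { id: 124 }],
};

// Builds a transport that records every request in `log`.
function makeFakeTransport(log) {
  return async (method, url) => {
    log.push(`${method} ${url}`);
    if (!(url in fakeServer)) throw new Error(`404: ${url}`);
    return fakeServer[url];
  };
}

class ApiAction {
  constructor(name, data = {}) {
    this.name = name;
    this.data = data;
  }
}

class EntryPoint {
  // `transport(method, url)` is injected so the sketch runs without a network.
  constructor(url, transport) {
    this.url = url;
    this.transport = transport;
  }

  async execute(action) {
    // 1. Ask the entry point where the action descriptor lives.
    const index = await this.transport('GET', this.url);
    const origin = new URL(this.url).origin;
    // 2. Fetch the descriptor of the named action.
    const descriptor = await this.transport('GET', origin + index.actions[action.name]);
    // 3. Build the real request; the descriptor says which fields go into the query string.
    const target = new URL(this.url + descriptor.endpoint.route);
    const queryData = descriptor.actionDataDescriptor.queryData || {};
    for (const key of Object.keys(queryData)) {
      if (key in action.data) target.searchParams.set(key, String(action.data[key]));
    }
    return this.transport(descriptor.endpoint.method, target.href);
  }
}

const log = [];
const entryPoint = new EntryPoint('https://example.com/api', makeFakeTransport(log));
entryPoint.execute(new ApiAction('ListUsers', { limit: 10 })).then((users) => {
  console.log(log.join('\n')); // three requests for one list of users
  console.log(users);
});
```

The calling code never mentions /users or the query string. Both come from the descriptor, at the price of two extra round trips per action unless something caches them.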

What are the disadvantages?

But the solution had its own issues… well, maybe calling them issues is an understatement:

- In fact, only a small part of the descriptor could be changed without the client noticing. The example shows that the /users route accepts limit as a parameter. But if we decided to rename this parameter to “Limit” and drop the old “limit”, the client wouldn’t be able to handle it. The client would still send limit=10 and the request would fail. So we have to go and modify the client code anyway to

```javascript
new ApiAction("ListUsers", {Limit: 10})
```

- The same thing happens when we have a typo in the name of the ListUsers action.

- We have not enforced in any way that our API is only available through an entry point. As a result, there is at least one client somewhere that has the URL hardcoded and is not using an entry point.

- We don’t provide any libraries for our customers to work with the API. If they want to use our API, they have to write their own client. It means that when a customer asks us for access to the API so they can call “this one route to create a user”, they have to implement our entire entry point protocol. The customer then has to choose between “I’ll call one stupid POST /api/users request at this address that does exactly what I want” and “I’ll implement hundreds of lines of code to get the entry point to tell me that I should call POST /api/users”.

- As for the idea of caching it at the HTTP level, there’s an old joke that describes it best: There are 2 hard problems in computer science: cache invalidation, naming things, and off-by-1 errors.

- It generally added insane complexity on all levels:

- In the browser console, you see a bunch of entry point requests that mostly don’t really interest you.

- We wanted multiple different BE services to be available to the client behind a single entry point. So realistically, we have one service that acts as an entry point and behind it are several other services that the entry point asks for descriptors in real-time.

- So when a client sends a GET /api/actions/ListUsers request, the service sends three more HTTP requests to three more services to see if they know the ListUsers action and only then does it return a response to the client.

- Of course, each of these parts can break, each of these parts can return some errors that need to be responded to correctly, and thus: each of these parts adds complexity to the overall solution.

- It was kind of funny when we found out that even the idea “client reads URL from descriptor” was broken. We never returned the whole URL, only the route where the action is available. So we told our client that the entry point was available at example.com/api and then it asked for the action detail:

```
GET example.com/api/actions/ListUsers

{
  "endpoint": {
    "method": "GET",
    "route": "/users",
    "responseType": "json"
  }
}
```

- Now, a question for you: at what address is the ListUsers action available? Because each client evaluated it a little differently.

- We had a client that simply took the base address example.com/api and appended the route, i.e. got the address example.com/api/users.

- Then we got the brilliant idea that there must be libraries for joining addresses, so we found one and used it: resolve("example.com/api", "/users"), but it returned example.com/users (just as the browser would have evaluated it if that was the target of a link).

- Occasionally, we had a configuration error and someone typed the entry point URL with a slash at the end (example.com/api/ instead of example.com/api). Then after joining, we got example.com/api//users (two slashes) and it didn’t work again.

- How did we solve it? We don’t want routes in the Czech Republic! To make it clear to the client, we decided to put the whole address instead of the route. We sat down at the keyboard and after a month of programming, the entry point started returning something like this:

```json
{
  "endpoint": {
    "method": "GET",
    "responseType": "json",
    "url": "https://example.com/api/users"
  }
}
```

- Then just edit all the clients and it should be a job well done!

- “So let’s start the frontend on the other domain, right? That must work.” Every time a programmer says it has to work, it’s guaranteed not to work. We wanted our applications to be available on two domains at the same time, http://example.com and http://example2.com, with only one instance actually running on the backend. But our entry point could only be configured for one domain. So when you asked for the action descriptor at http://example2.com/api/actions/ListUsers, it still returned that the action was available at http://example.com/api/users.

- To make it worse, we have more entry points by accident of fate. We have a campaign management product that consists of several services and we have a user management product that also consists of several services. And each product has its own entry point. The original idea that there would be one entry point for all products has somehow disappeared. We have maybe ten.

- The entry point protocol was invented by us and was not standard. That doesn’t help the situation. We were familiar with OpenAPI/Swagger, but at the time it didn’t do everything we would have wanted it to do, so we decided not to use it.

- But we decided to switch to it, so our latest products don’t have an entry point, but they expose OpenAPI descriptors.
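The different readings of “route” described in the list above can be reproduced with a few lines of JavaScript. This sketch uses the standard `URL` class rather than whichever library we used at the time, and adds an https:// scheme because `URL` requires one. The `joinUrl` helper at the end is just one possible normalisation, not the fix we shipped:

```javascript
const entryPointUrl = 'https://example.com/api';
const route = '/users';

// 1. Plain concatenation: what the first client did.
const concatenated = entryPointUrl + route;

// 2. Standard URL resolution: a route starting with "/" replaces the whole path.
const resolved = new URL(route, entryPointUrl).href;

// 3. Concatenation when the configured entry point ends with a slash.
const doubled = 'https://example.com/api/' + route;

console.log(concatenated); // https://example.com/api/users
console.log(resolved);     // https://example.com/users
console.log(doubled);      // https://example.com/api//users

// Hypothetical helper: treat the route as relative to the entry point,
// regardless of trailing or leading slashes.
function joinUrl(base, path) {
  return base.replace(/\/+$/, '') + '/' + path.replace(/^\/+/, '');
}

console.log(joinUrl('https://example.com/api/', '/users')); // https://example.com/api/users
```

None of the three results is wrong by itself. The protocol simply never said which one was intended, so every client picked its own.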

Lessons learned

Did we manage to develop the “bike” as we wanted? I think so. But someone put sand in the chain and switched the brake with the bell. Entry point solves some problems, allowing the client to easily create actions, but along with that, the entry point introduced a bunch of new problems that are not easy to fix. At the same time, this isn’t a prototype we’ve been testing for four months, but rather a thing that’s been in production for four years and we’ve burned several man-months of work on it. And what’s the lesson here?

- Use a standard solution. Even if OpenAPI didn’t meet our requirements 100%, it would probably still be a better solution than our proprietary protocol.

- Before we started implementing Entry Point, we should have sat down and defined exactly what we wanted from it. Define the API of the entire entry point and think carefully about it.

- What we didn’t realize is that this protocol would be used by every service we have, and therefore would not be easy to get rid of or change in any way. We spent a lot more time on the design of many a microservice than we did on the entry point design. Yet getting rid of a microservice is much easier than getting rid of the entry point that all our microservices use!

- Not only should we have defined what we wanted from it, we should have been absolutely precise about the use cases the entry point was meant to solve. Over time, people stopped understanding what an entry point is good for, or thought that an entry point solves use cases that it doesn’t really solve. We don’t have good documentation anywhere that we can go back to and say “entry point in the current implementation is supposed to solve use cases X, Y and Z, but it no longer solves use cases A, B and C”. We should have written down how we expect the client to use it and what it solves for them. We didn’t do any of that; instead there were different, incompatible visions in different people’s heads of what it would and wouldn’t do. ¯\_(ツ)_/¯